Accenture Plc on Tuesday announced the launch of the Accenture AI Refinery framework, built on Nvidia Corp.’s new AI Foundry service. Designed to help clients build custom large language models using Llama 3.1 models, the offering lets enterprises refine and personalize these models with their own data and processes to create domain-specific generative AI solutions.

In a briefing, Kari Briski, vice president of AI software at Nvidia, said she’s often asked about the buzz surrounding generative AI.

“It’s been a journey,” she said. “Yes, generative AI has been a big investment. And enterprises ask, ‘Why should we do it? What are the use cases?’ When you think about employee productivity, have you ever wished that you had more hours in the day? I know that I do. Maybe if there were 10 of you, you could get more things done. And that’s what generative AI helps — automate repetitive, mundane tasks, things like summarization, best practices and next steps.”

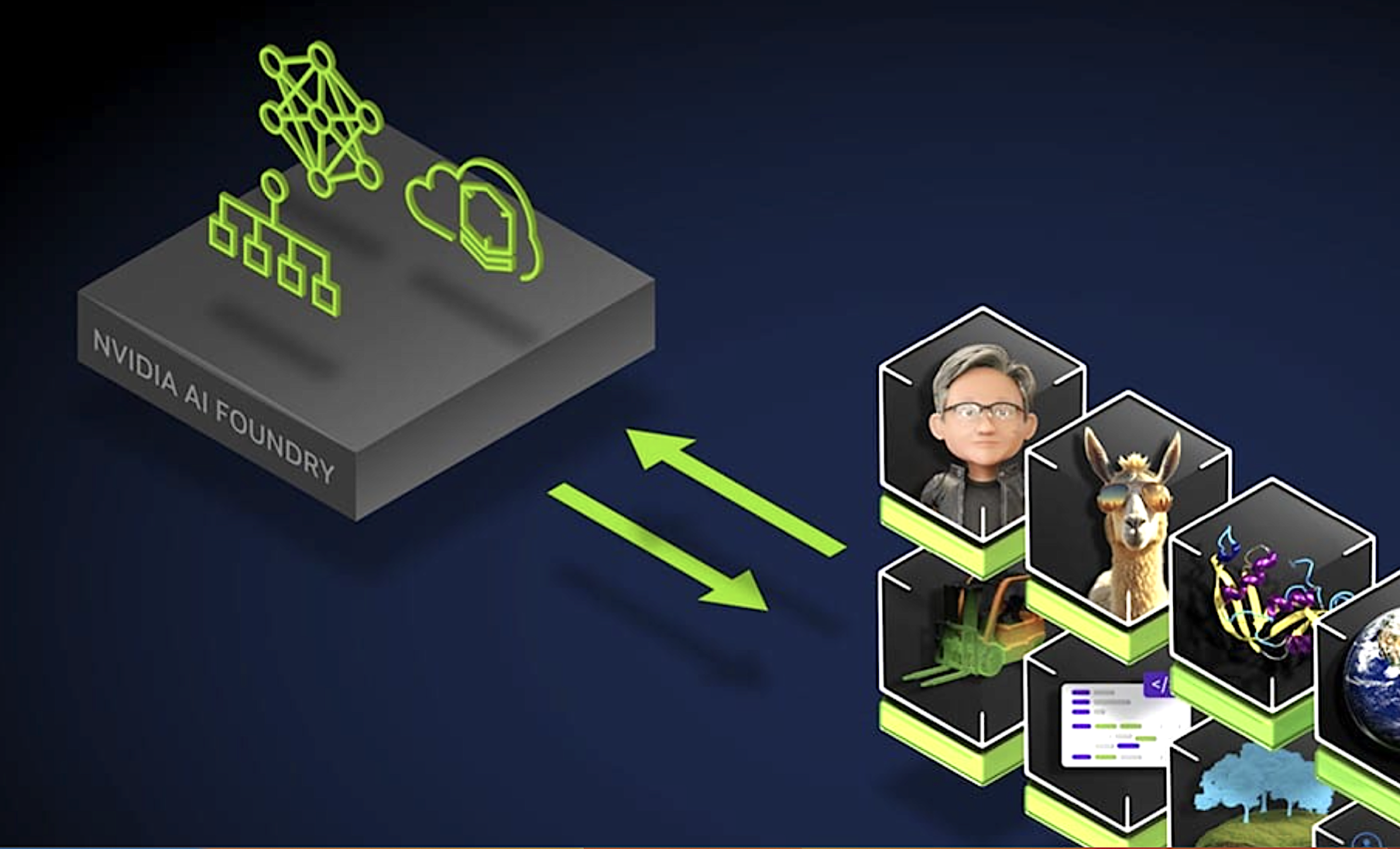

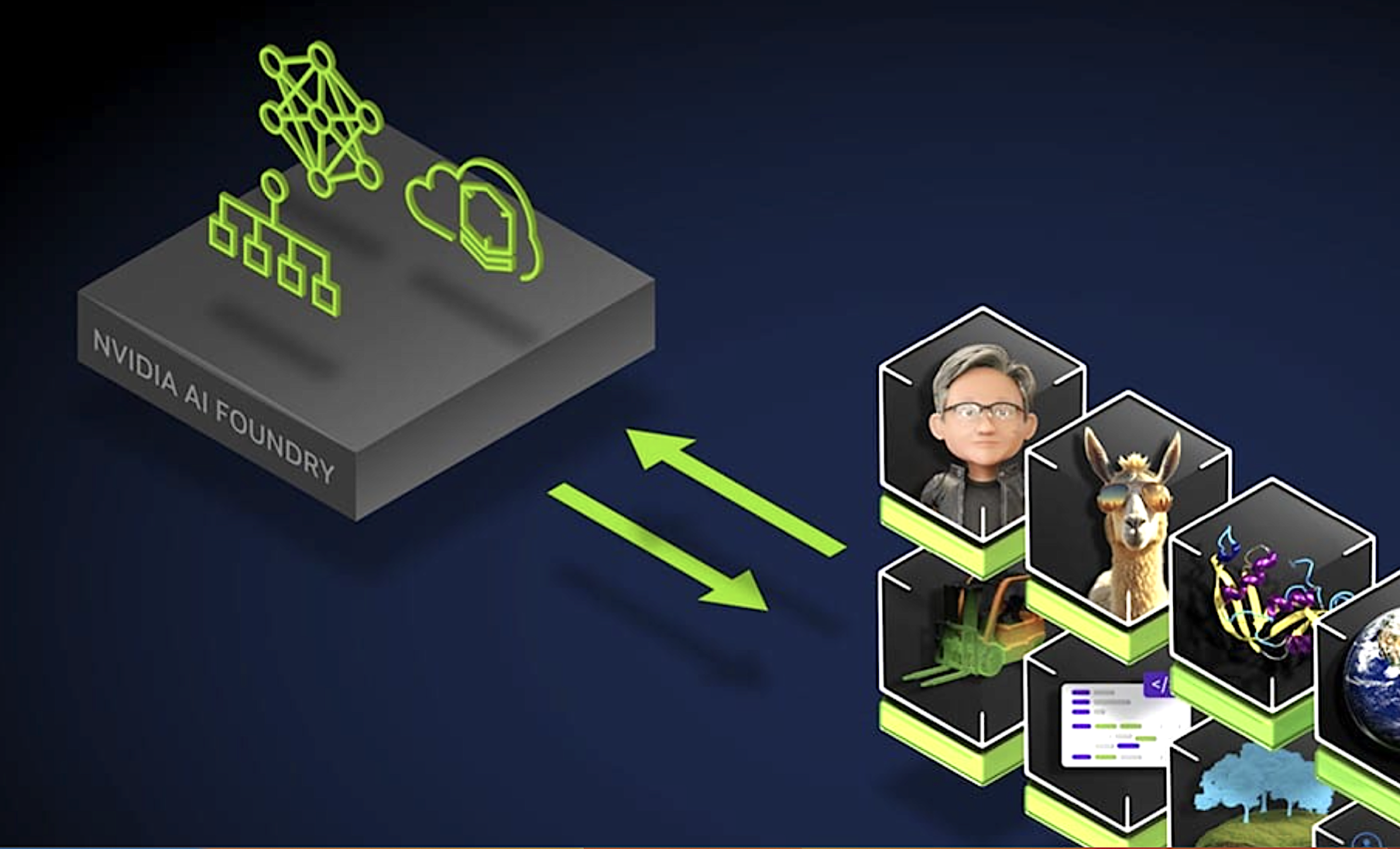

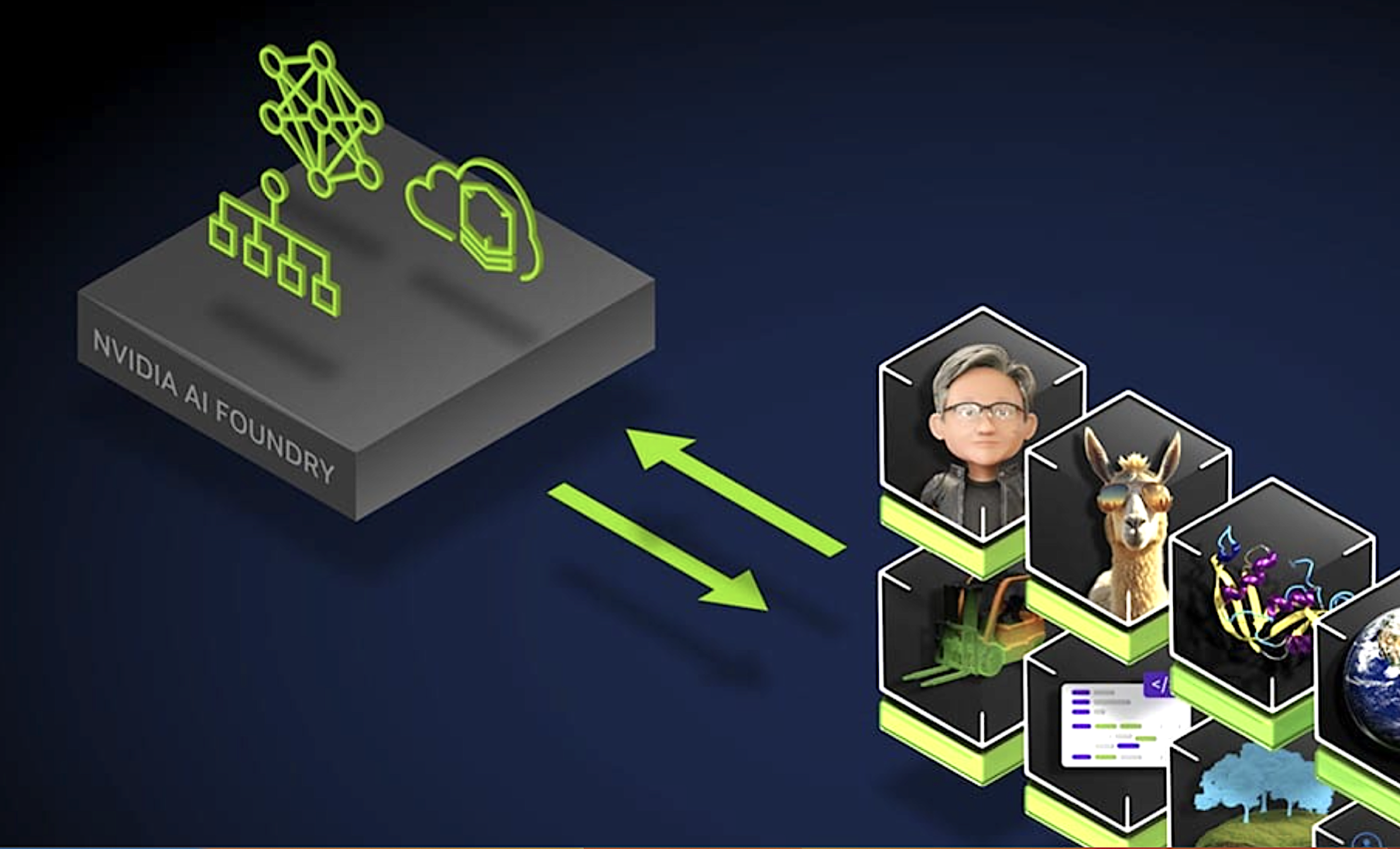

“Nvidia AI Foundry is a service that enables enterprises to use accelerated computing and software tools combined with our expertise to create and deploy custom models that can be supercharged for enterprises’ generative AI applications,” Briski said.

The AI Foundry platform offers infrastructure for developing and deploying custom AI models. It includes:

Briski said that once companies customize a model, they must evaluate it. This is where some customers get stuck, she noted, recounting questions she has heard from them: “‘How well is my model doing? I just customized it. Is it doing the things that I need?’ So the NeMo customers are offered many ways to evaluate, with academic benchmarks, you can upload your own custom evaluation benchmarks, you can connect to a third-party ecosystem of human evaluators, and then you can also use an LLM as a judge.”
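To make the evaluation options concrete, here is a minimal, generic sketch of scoring a customized model against a custom benchmark, with either a simple exact-match judge or a second model acting as "LLM as a judge." It is not NeMo's evaluation API: the benchmark format (prompt and reference-answer pairs), the function names and the YES/NO judge prompt are all hypothetical, and a model is represented simply as any function from prompt text to answer text.

```python
from typing import Callable, Sequence, Tuple

# Hypothetical benchmark format: (prompt, reference answer) pairs.
BenchmarkItem = Tuple[str, str]
# A judge takes (prompt, model answer, reference) and returns pass/fail.
Judge = Callable[[str, str, str], bool]


def exact_match_judge(prompt: str, answer: str, reference: str) -> bool:
    """Simple stand-in judge: case- and whitespace-insensitive exact match."""
    return answer.strip().lower() == reference.strip().lower()


def make_llm_judge(judge_model: Callable[[str], str]) -> Judge:
    """Wrap a second model as an "LLM as a judge" that replies YES or NO.

    The prompt wording below is an illustrative assumption, not a standard.
    """
    def judge(prompt: str, answer: str, reference: str) -> bool:
        verdict = judge_model(
            f"Question: {prompt}\n"
            f"Reference answer: {reference}\n"
            f"Candidate answer: {answer}\n"
            "Is the candidate answer correct? Reply YES or NO."
        )
        return verdict.strip().upper().startswith("YES")
    return judge


def evaluate(model: Callable[[str], str],
             benchmark: Sequence[BenchmarkItem],
             judge: Judge = exact_match_judge) -> float:
    """Return the fraction of benchmark items the judge marks as passing."""
    if not benchmark:
        raise ValueError("benchmark must not be empty")
    passed = sum(judge(prompt, model(prompt), reference)
                 for prompt, reference in benchmark)
    return passed / len(benchmark)
```

For instance, with `benchmark = [("Capital of France?", "Paris"), ("2 + 2?", "4")]` and a toy model that answers "paris" and "5", `evaluate` returns 0.5 under the exact-match judge. Swapping in `make_llm_judge(...)` keeps the same scoring loop and changes only who decides whether an answer passes.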

As Briski indicated in the briefing, several companies are using AI Foundry, including Amdocs, Capital One and ServiceNow. According to Nvidia, all three are integrating AI Foundry into their workflows and have gained a competitive edge by developing custom models that incorporate industry-specific knowledge.

Briski also discussed what she sees as the unique advantages of Nvidia NIM, the company’s inference microservices.

“NIM is a customized model and container accessed by a standard API,” she explained. “And this is the culmination of years of work and research that we’ve done.” She said she has been at Nvidia for eight years, and the company has been working on it at least that long.

“It’s on a cloud-native stack, it runs out-of-the-box on any GPU,” she said. “That’s across our 100 million-plus installed base of Nvidia GPUs. Once you have NIM, you can customize and add models very quickly.”
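To illustrate what "accessed by a standard API" can look like in practice: NIM microservices for LLMs are documented as exposing an OpenAI-compatible HTTP API, so a client sends an ordinary chat-completion request. The sketch below uses only Python's standard library and assumes a NIM container running locally on port 8000 and the model identifier `meta/llama-3.1-8b-instruct`; both the URL and the model ID are assumptions to check against your own deployment. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: a NIM container is running locally and listening on port 8000.
NIM_BASE_URL = "http://localhost:8000/v1"
# Assumption: model ID for the Llama 3.1 8B NIM; verify against your deployment.
MODEL_ID = "meta/llama-3.1-8b-instruct"


def build_chat_request(prompt: str,
                       base_url: str = NIM_BASE_URL,
                       model: str = MODEL_ID,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first completion choice."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With a service actually running, `send(build_chat_request("Summarize this meeting transcript: ..."))` would return the model's reply. Because the request shape is the same one many client libraries already speak, swapping between the 8B, 70B and 405B models should mostly mean changing the model ID and endpoint rather than the client code.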

She added that NIM now supports Llama 3.1, including Llama 3.1 8B NIM (a single-GPU LLM), Llama 3.1 70B NIM (for high-accuracy generation) and Llama 3.1 405B NIM (for synthetic data generation).

Accenture also said it worked with Nvidia on the AI Refinery framework, which runs on AI Foundry. According to Accenture, the framework advances generative AI for enterprises. Integrated within Accenture’s foundation model services, it is meant to help businesses develop and deploy custom LLMs tailored to their requirements. According to both companies, the framework includes four key elements:

Accenture’s AI Refinery framework could change enterprise functions, starting with marketing and expanding to other areas. Its focus on quickly building and deploying generative AI applications tailored to specific business needs reflects Accenture’s commitment to innovation and transformation. By applying the framework internally before offering it to clients, Accenture signals how much potential it sees in the approach.

In the announcement, Julie Sweet, chair and chief executive officer of Accenture, highlighted the transformative potential of generative AI in reinventing enterprises. She emphasized the importance of deploying applications powered by custom models to meet business priorities and drive industry-wide innovation.

In addition, Jensen Huang, founder and CEO of Nvidia, noted that Accenture’s AI Refinery would provide the necessary expertise and resources to help businesses create custom Llama LLMs.

Accenture’s launch of the AI Refinery framework could be pivotal for enterprise adoption and deployment of generative AI. By combining the Llama 3.1 models, which Briski praised in the briefing, with the capabilities of AI Foundry, Accenture enables businesses to create highly customized and effective AI solutions.

As enterprises continue to explore the potential of generative AI, frameworks such as Accenture’s AI Refinery will play a crucial role in turning general-purpose models into solutions built around each company’s own data and processes.

The collaboration between Accenture and Nvidia promises to drive further advancements in AI technology, offering businesses avenues for growth and innovation. It also underscores that all AI roads lead to Nvidia.

Zeus Kerravala is a principal analyst at ZK Research, a division of Kerravala Consulting. He wrote this article for SiliconANGLE.